I have been working on a requirement recently that involves importing a fixed width character file in D365 F&O via DMF by way of Logic Apps. There had been a couple on challenges on this one and I have Googled / Binged about it and found forums where people have had to find alternative solutions, thinking that it’s impossible (for example, this question from the Microsoft Dynamics Community Forum).

I’ve also read many similar blog posts where some people say that they have asked the customer to change the file format. Like this one from before.

We did complained to Microsoft about one year ago because we had the same issue, and as I see it stills hasn’t been fixed, so I wouldn’t believe it will be at least solved in a short time. Sorry to not bring to you a solution, in our case we had to change the file format.

But I’ve been wondering why the customer has to change the file format? Can the developer not create a solution?

D365 F&O Limitations

As a known limitation, if you’re using the Data Management Framework (DMF), the incoming/inbound file to D365 F&O can’t be a fixed character file, despite having the option. This is primarily because the DMF doesn’t know how to split the characters per column even though you configure a new datasource file format and say it’s fixed width. However, for your outbound files, this works fine as you will be handling the data manipulation operations using your fixed size EDTs and write the data on to a file. Oh, even for your outbound files there’s a bug with row delimiters which I will write about another time.

Coming back to the inbound files, as mentioned above on the first link, the symptom is the source table containing one or more rows based on where there are spaces within your file, not by using fixed width columns. Which means, by default, the system assumes you need to break the source file into different columns based on where the space is. For example, let’s say my incoming plain text file looks like this:

AAAME123WORKS THIS IS A TEST COLUMN DATA 12345 JEVERYTHING IS POSSIBLE

AAAME421SUPER EVERYTHING IS POSSIBLE 56789 AFOR A DEVELOPER The schema should be:

| Field name | Character length |

|---|---|

| Code | 3 |

| Name | 5 |

| Some text | 6 |

| Description | 30 |

| ID | 8 |

| Level | 1 |

| Long description | 29 |

Such data poses the following challenges:

- DMF will not create columns as per the configuration used when you setup the file import.

- The mapping will not be generated automatically for inbound files even if data entities contain EDTs with fixed width field sizes.

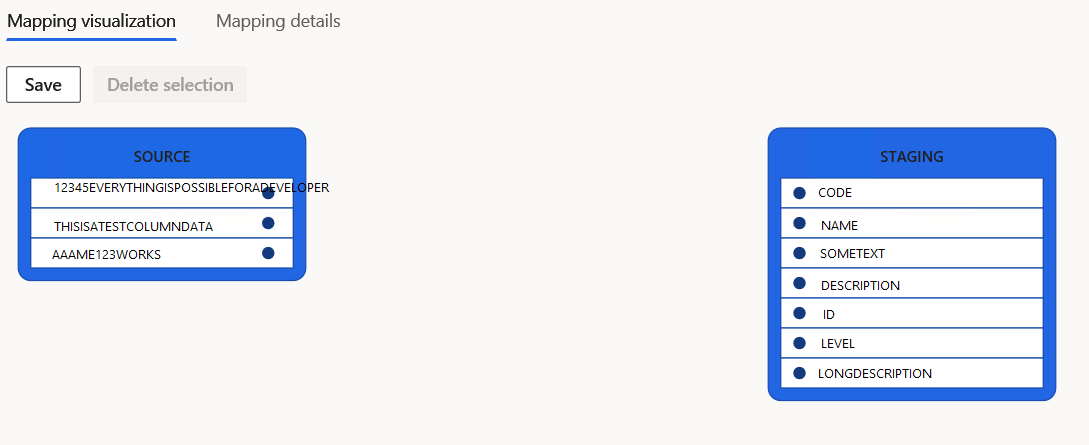

Continuing with the above example, DMF’s suggested mapping is as follows:

So what’s the workaround?

I decided to convert the incoming file into a file with delimited columns, specifically commas, to create a CSV file. So what’s the challenge now?

In my case, the input file comes from a third-party using Logic Apps –“ when a new file is added or modified trigger”. You can follow standard Microsoft documentation on MSDN and create this trigger, get file metadata, get file content.

But how to you manipulate this file content and convert it into columns? How can you read file that has no delimiters and parse it?

Well, you can implement this using regular expressions (RegEx) but does Logic Apps support it? In short, yes. You can use the block – inline code. Inline Code lets you write JavaScript code and, therefore, implement RegEx to split the rows into columns based on the field length, and separate these with commas. The output of this block can be used to create a CSV file.

If there’s a solution, what is the challenge now?

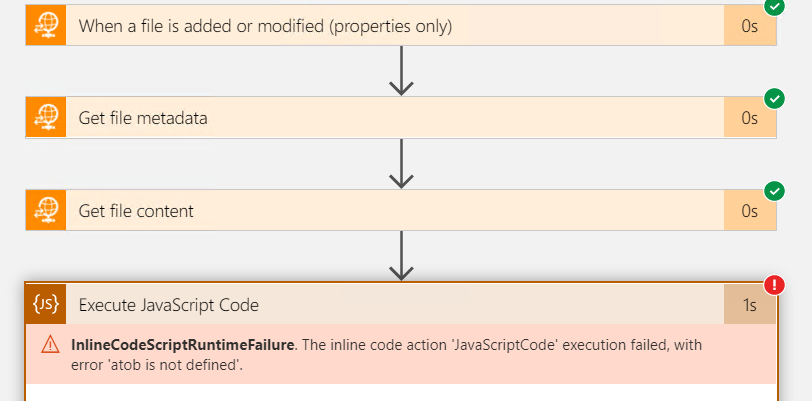

The next challenge I faced was decoding the file contents of the “Get file content” block that was encoded in Base64. Normally this is quite trivial in JavaScript in that atob() can be employed. Unfortunately, access to atob() is limited by scope (typically to window when within a web browser) and, as the backend of the block is Node.js, we are dependent on global scopes. Alternatively, one can use the Buffer object — used by atob() and btoa() — but, again, a mixture of Node.js and Azure limitations result in require() being disallowed and, for us, the requirement of an alternative solution.

Access to the Buffer object may depend on the Logic App subscription (untested). If you are using a Single tenant subscription which is the Logic App (Standard) resource type, then more recent versions of Node.js are available and Buffer may have made it into the global scope.

In my case, by using a multi-tenant subscription — which is the pay-as-you-go Consumption resource type — only Node.js v8.11.1 was available. Availability of these functions can be determined by seeing the resultant error messages — if Buffer is undefined, then it’s unavailable to you (see below screenshot).

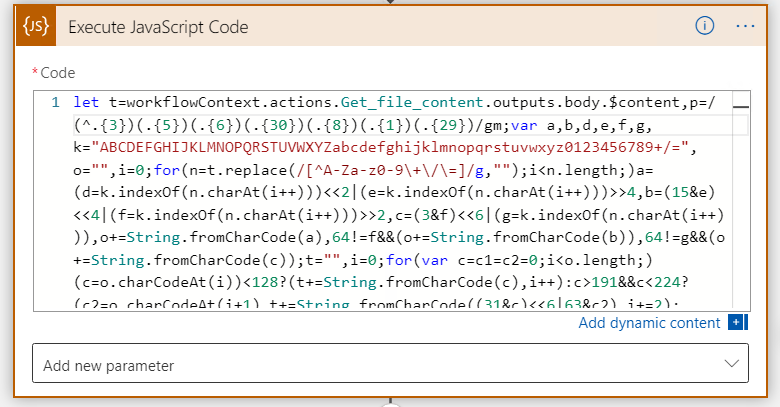

This requires a crazy novel solution! How about manually decoding Base64? The result? Check this out…

I can guess what you’re thinking… it’s “unreadable” minified code: almost every variable is condensed, and spaces and new lines are removed. This is because Logic Apps imposes a limit of 1024 characters in the code block. Exceeding this character limit results in an error saying that the character limit exceeded.

In its expanded form, this appears as follows:

let t = workflowContext.actions.Get_file_content.outputs.body['$content'];

let p = /(^.{3})(.{5})(.{6})(.{20})(.{8})(.{1})(.{29})/mg;

// Begin Base64 decoding (as atob isn't available)

var k = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/=";

var o = "";

var a, b, c;

var d, e, f, g;

var i = 0;

n = t.replace(/[^A-Za-z0-9\+\/\=]/g,"");

while (i < n.length)

{

d = k.indexOf(n.charAt(i++));

e = k.indexOf(n.charAt(i++));

f = k.indexOf(n.charAt(i++));

g = k.indexOf(n.charAt(i++));

a = (d << 2) | (e >> 4);

b = ((e & 15) << 4) | (f >> 2);

c = ((f & 3) << 6) | g;

o += String.fromCharCode(a);

if (f != 64)

{

o += String.fromCharCode(b);

}

if (g != 64)

{

o += String.fromCharCode(c);

}

}

t = "";

i = 0;

var c = c1 = c2 = 0;

while ( i < o.length )

{

c = o.charCodeAt(i);

if (c < 128)

{

t += String.fromCharCode(c);

i++;

}

else if((c > 191) && (c < 224))

{

c2 = o.charCodeAt(i+1);

t += String.fromCharCode(((c & 31) << 6) | (c2 & 63));

i += 2;

}

else

{

c2 = o.charCodeAt(i+1);

c3 = o.charCodeAt(i+2);

t += String.fromCharCode(((c & 15) << 12) | ((c2 & 63) << 6) | (c3 & 63));

i += 3;

}

}

// End of base64 encoding

t = t.replace(p, "$1,$2,$3,$4,$5,$6,$7");

return t;The conversion of Base64 to ASCII/binary (Base256) is essentially the process of moving from 6-bit space (each character representing a value from 0 to 63 with = used for padding) to 8-bit space. This essentially is a mapping of four bytes in Base64 to three bytes in ASCII/binary. This is accomplished by evaluating the character value in 6-bit space, combining it with adjacent characters. There’s a special case however: when the content doesn’t fully fit into four bytes in the encoding process, the encoder pads the remainder of the message with the = symbol.

Back to the RegEx problem

Where were we before the Base64 detour? Ah yes, using regex to create CSV files. You may have caught a glimpse at the code used within the inline code block:

let p = /(^.{3})(.{5})(.{6})(.{20})(.{8})(.{1})(.{29})/mg;Is the RegEx used to define the seven columns and their widths (defined in braces) and each one is within a RegEx capture group (with the global and multiline modifies enabled). This means that when we run t.replace(), the corresponding capture group can be called upon. Don’t forget to add the commas between the called capture groups:

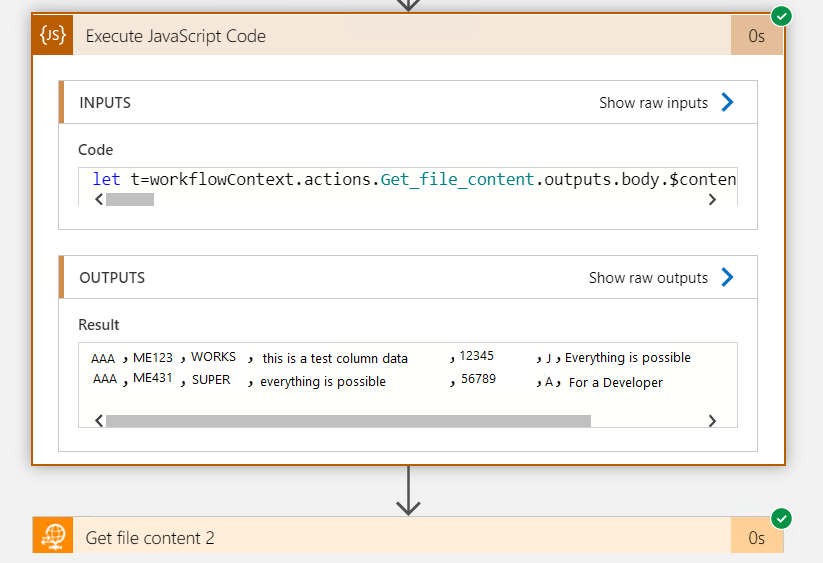

t = t.replace(p, "$1,$2,$3,$4,$5,$6,$7");The output can then be routed into a Create File block whose contents is “Result”, the return-ed data from the Inline Code block.

So, one you have done all of these, you can see the output is nicely split into columns

Now you can add a header, concatenate a filename with the .csv extension and run the Logic Apps trigger. You should then see your new file generated as a .csv and it is so cool. Happy learning!